Chronic wound care is a significant health and economic challenge worldwide. Unlike many other conditions, chronic wounds can be significantly mitigated through active monitoring. While various monitoring techniques exist, the scarcity of large and diverse training datasets limits the development and validation of machine learning frameworks.

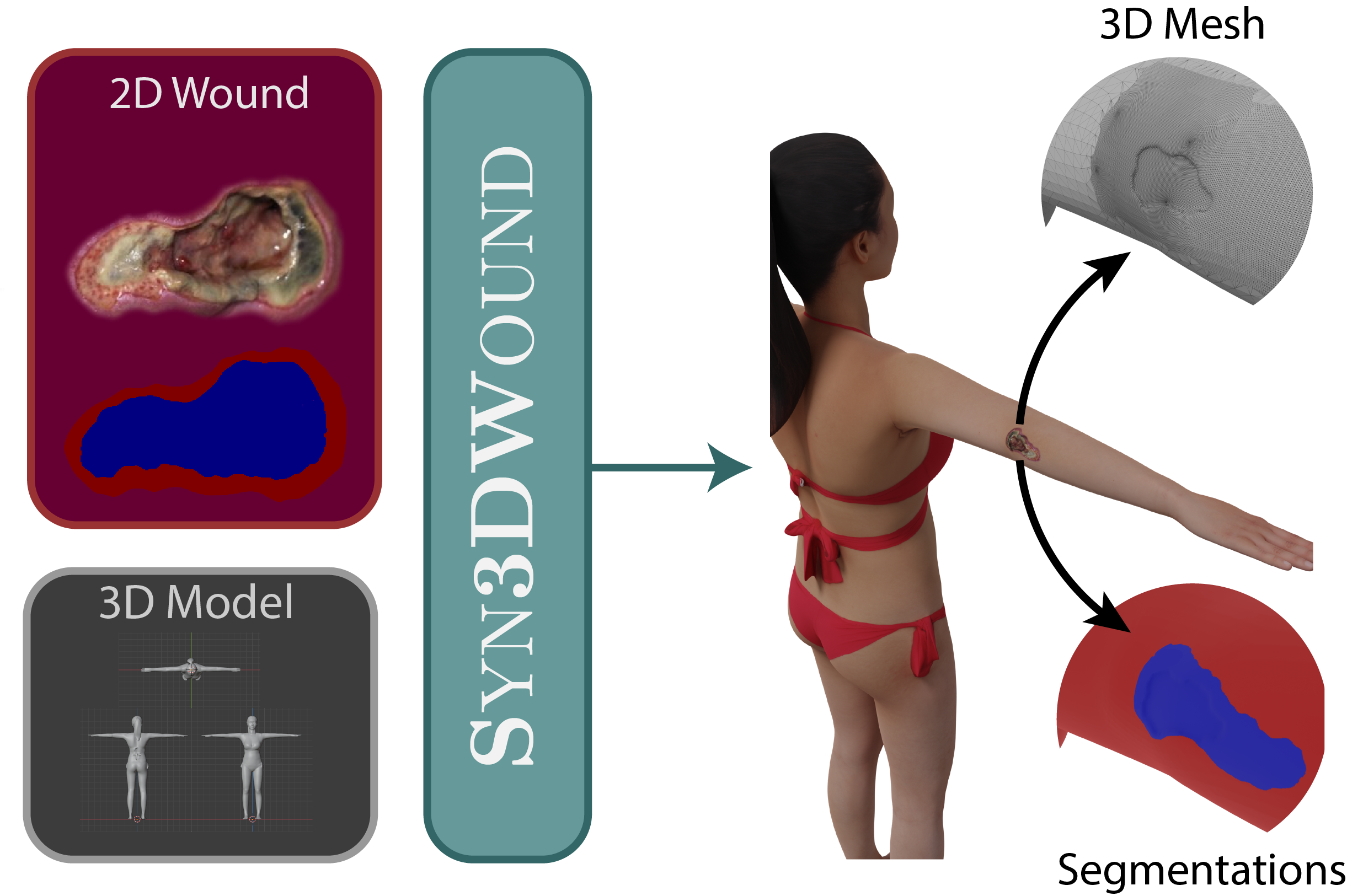

In this work, we introduce Syn3DWound, an openly available dataset of high-fidelity simulated wounds with 2D and 3D annotations. We also introduce baseline methods and a benchmarking framework aimed at automating 3D morphometry analysis and 2D/3D wound segmentation.
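As an illustration of what scoring a 2D wound segmentation can look like, here is a minimal sketch that compares a predicted binary mask against a ground-truth mask using the Dice coefficient and intersection over union (IoU). These two overlap measures are an assumption chosen for illustration; they are not necessarily the metrics used by the Syn3DWound benchmark. The function name `overlap_scores` and the toy masks are hypothetical.

```python
def overlap_scores(pred, target):
    """Return (dice, iou) for two binary masks given as nested lists of 0/1.

    Illustrative only: the metrics and this helper are assumptions,
    not part of the Syn3DWound code base.
    """
    # Flatten both masks to 1D lists of booleans.
    p = [bool(v) for row in pred for v in row]
    t = [bool(v) for row in target for v in row]
    if len(p) != len(t):
        raise ValueError("masks must have the same number of pixels")

    inter = sum(a and b for a, b in zip(p, t))  # pixels marked in both masks
    total = sum(p) + sum(t)                     # |pred| + |target|
    union = total - inter                       # pixels marked in either mask

    # By convention, two empty masks are treated as a perfect match.
    dice = 2 * inter / total if total else 1.0
    iou = inter / union if union else 1.0
    return dice, iou


# Toy 2x3 masks: 1 marks a wound pixel, 0 marks background.
pred = [[0, 1, 1],
        [0, 1, 0]]
target = [[0, 1, 0],
          [0, 1, 1]]

dice, iou = overlap_scores(pred, target)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

Both scores range from 0 (no overlap) to 1 (identical masks). In practice the masks would be loaded from image files as arrays rather than written as nested lists.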

Evaluation

If you find this work useful, please cite:

    @article{lebrat2023syn3dwound,
      title={Syn3DWound: A Synthetic Dataset for 3D Wound Bed Analysis},
      author={Lebrat, L{\'e}o and Cruz, Rodrigo Santa and Chierchia, Remi and Arzhaeva, Yulia and Armin, Mohammad Ali and Goldsmith, Joshua and Oorloff, Jeremy and Reddy, Prithvi and Nguyen, Chuong and Petersson, Lars and others},
      journal={arXiv preprint arXiv:2311.15836},
      year={2023}
    }

Acknowledgment

This research was supported by AI 4 Missions